- Monday Momentum

- Posts

- The Efficiency Rebellion

The Efficiency Rebellion

Why smart teams are choosing 7B over 405B and the 100x cost advantage reshaping architecture decisions

Happy Monday!

Here's the conversation nobody's having at AI conferences: while Anthropic raises at a $350 billion valuation and OpenAI sits at $500 billion, the actual architecture decisions happening inside engineering teams are going the opposite direction.

Small Language Models are eating Large Language Models from the bottom up. And if you're building production AI systems, you've probably already noticed.

The economics are absurd. Running a 7-billion-parameter model costs 100x less than a frontier LLM for the same query volume. This isn't an incremental improvement, it's a different cost structure entirely. It changes what you can build, how you can price it, and whether your unit economics ever make sense.

But the real story isn't just cost. It's that small models are now good enough for most of what enterprises actually need AI to do. The capability gap closed faster than anyone expected. And the implications are bigger than just switching out an API endpoint.

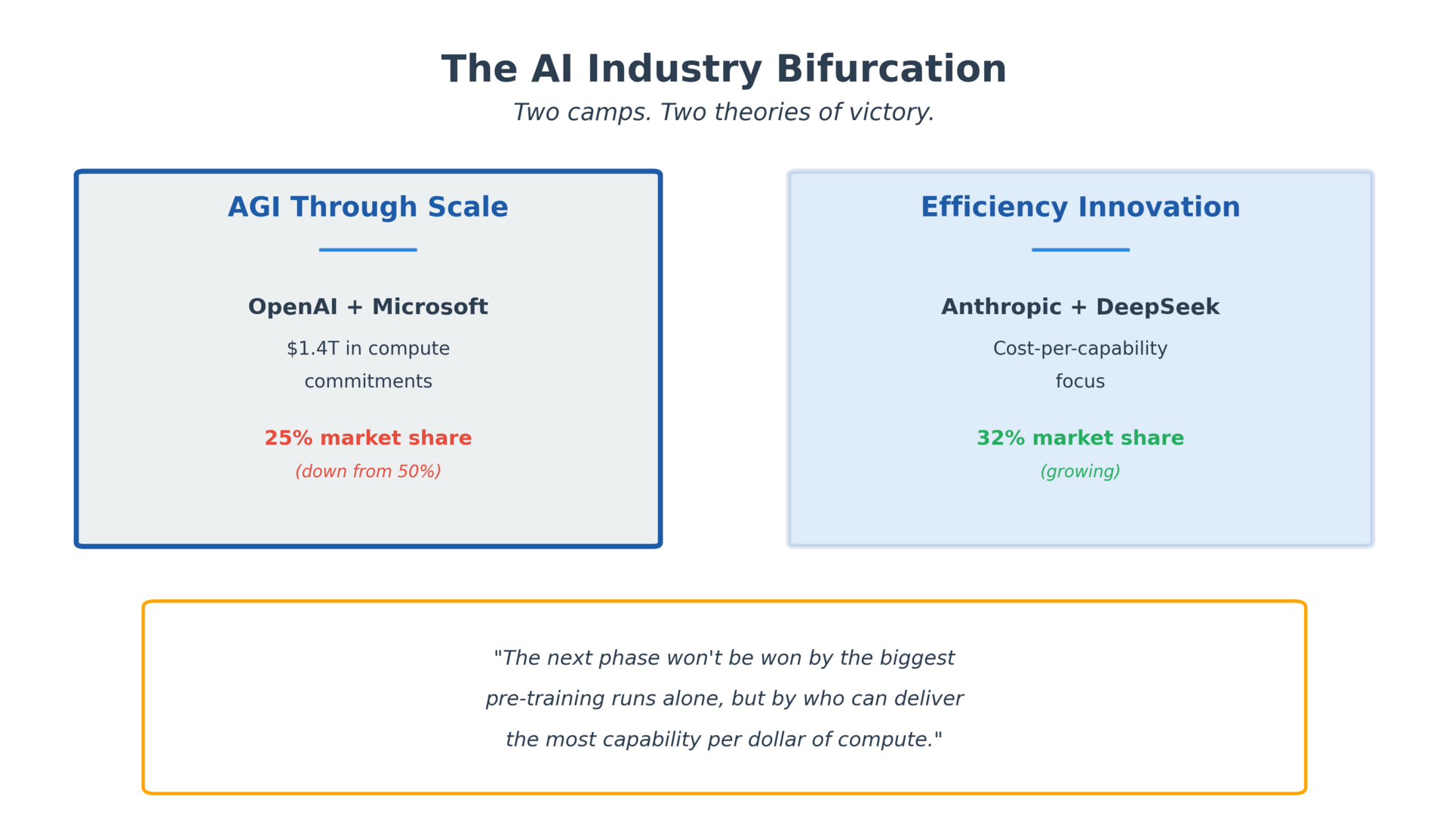

While VCs pour billions into frontier models, production teams are quietly going the opposite direction. Small Language Models cost 100x less to run and often work better for specific tasks. The industry is bifurcating: one camp betting on AGI through scale, the other on efficiency through algorithmic innovation. Anthropic's 32% market share (vs OpenAI's 25%) suggests enterprise buyers have made their choice. The real question for builders: which model for which task, and how do you orchestrate between them?

The Cost Math That Changes Everything

Processing 1 million conversations monthly costs $15,000 to $75,000 with frontier LLMs. The same workload on small models costs $150 to $800.

A customer support system handling 10 million queries per month goes from $150K-$750K monthly to $1,500-$8,000. That's the difference between "this will never have positive unit economics" and "we can actually make money on this."

Training costs follow the same pattern. Frontier LLMs cost north of $100 million to train. You can fine-tune a domain-specific 7B model for the cost of a single enterprise sales cycle.

The cost advantage compounds. Because small models are cheaper to run, you can afford to run them more. Fine-tune multiple versions for different use cases. A/B test aggressively. Deploy on-device or at the edge. The entire development cycle changes when compute cost stops being a constraint.

"LLMs cost $15K-$75K per million conversations. SLMs cost $150-$800. The math isn't close."

The economics tell two stories: 100x cost reduction with small models, and enterprise buyers voting with their wallets.

The Capability Convergence Nobody Saw Coming

Two years ago, the gap between frontier models and small models was enormous. That gap closed fast.

Models like Phi-3-mini and Mistral 7B now run on a single GPU or even CPUs, with sub-second latency. For specific tasks, especially domain-focused applications, they match or beat larger models.

The key phrase is "specific tasks." Small models aren't general intelligence engines. They're specialists. Most production use cases need specialists, not generalists.

Customer support triage doesn't need to understand quantum physics. Fraud detection doesn't need creative writing capability. Code completion doesn't need to pass the bar exam.

Strip away capabilities you don't need, and you get something faster, cheaper, and often more reliable than the kitchen-sink approach.

Healthcare, law, and finance are leading this shift because they can't pipe sensitive data to cloud APIs. These regulated industries need on-premise deployments, explainability, and domain expertise that generic models don't have.

A 7B model trained on your company's support tickets, product documentation, and resolved cases will outperform GPT-4 for your specific support workflow without sending customer data to OpenAI's servers.

Where Small Models Dominate

Latency-critical applications. Real-time fraud detection, on-device assistants, and anything needing sub-second response times without network round-trips.

High-volume, routine tasks. The workhorses of enterprise AI like chatbots handling common questions, document classification, and sentiment analysis at scale don't need frontier capabilities.

Edge and mobile. IoT devices, mobile assistants, and offline environments where you can't run 405B parameters on a phone, but you can run 7B.

Regulated environments. Healthcare, finance, and law where data can't leave the premises and compliance requirements make cloud APIs a non-starter.

Agentic systems. Agents benefit from fast, cheap, reliable task completion more than maximum capability. Calling a small model 100 times for the cost of one frontier call unlocks more sophisticated workflows.

Retailers use small models for personalized recommendations at scale. Financial firms deploy them locally for real-time fraud detection. Healthcare systems run domain-specific models that understand medical terminology without hallucinating drug interactions.

The Hybrid Architecture Reality

Engineering teams that moved beyond proof-of-concept aren't choosing LLM vs SLM. They're building hybrid architectures.

Start with cloud services to get something working. Identify high-volume, routine tasks killing your API costs. Move those to small models. Keep complex reasoning on large models.

A typical production architecture: small model handles triage, classification, and routine queries. Large model handles complex reasoning, edge cases, and anything requiring broad knowledge. Orchestration layer routes requests based on complexity.

The orchestration layer is becoming a core competency. Being able to route intelligently between models matters more than having access to the biggest one.

This isn't theoretical. It's happening in production right now.

The Strategic Divergence in the Model Market

While this is happening in production, the market is bifurcating.

Anthropic raised at $350B with 32% enterprise market share. OpenAI is at $500B with 25% share (down from 50% in 2023). Google holds 4 of the top 10 LLMArena models. OpenAI's best ranks eighth.

On one side: OpenAI and Microsoft with $1.4 trillion in compute commitments, betting on AGI through scale.

On the other: Anthropic's "do more with less" and DeepSeek's algorithmic innovations, both focusing on cost-per-capability over raw capability.

The next phase won't be won by the biggest pre-training runs alone, but by who can deliver the most capability per dollar of compute.

Enterprise buyers agree. Anthropic's market share growth and the shift to purchased solutions (76% of use cases bought vs built) suggest cost and deployment flexibility matter more than leaderboard position.

IBM predicts "2026 will be the year of frontier versus efficient model classes."

Two camps. Two theories of victory.

Two competing visions for AI's future: scale vs. efficiency. The market is choosing sides.

The Bottom Line

The future of AI is pluralistic.

Large models will push the frontier, handle complex reasoning, and drive headlines.

Small models will handle the actual workload, deliver efficiency and domain expertise, and make the unit economics work.

The winners won't be teams with access to the biggest models. They'll be teams that know which model to use when, and how to orchestrate between them.

2026 is the year this becomes standard practice. The efficiency rebellion is already happening. In pull requests and architecture reviews, not press releases.

In motion,

Justin Wright

If small models are now good enough for most production tasks and cost 100x less to operate, what happens to the multi-billion-dollar valuations of companies whose entire moat is "we have the biggest model"?

Small Language Models for Your Niche Needs in 2026 - Hatchworks

SLM vs LLM: Accuracy, Latency, Cost Trade-Offs 2026 - Label Your Data

DeepSeek kicks off 2026 with paper signalling push to train bigger models for less - SCMP

What's next for AI in 2026 - MIT Technology Review

If you haven’t listened to my podcast Mostly Humans: An AI and business podcast for everyone yet, new episodes drop every week!

Episodes can be found below - please like, subscribe, and comment!